Binary Deployment of a Highly Available k8s Cluster

I. k8s cluster deployment options and basic configuration

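Common deployment options

1. Rancher: https://www.rancher.cn/
2. KubeSphere: https://kubesphere.io/zh/
3. Binary deployment of a highly available cluster with the open-source kubeasz project: https://github.com/easzlab/kubeasz (this document uses this project to deploy the k8s cluster)
4. kubeadm

Known issue with kubeadm: the certificates it issues are valid for one year by default. They must be renewed before they expire (for example with kubeadm certs renew). Otherwise the cluster stops working once they do.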
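To see how close a certificate is to expiry, OpenSSL's `x509 -checkend` option is enough. It exits non-zero when the certificate will have expired after the given number of seconds. The helper below is a minimal sketch and its name is hypothetical. The path in the usage line, /etc/kubernetes/pki/apiserver.crt, is the usual kubeadm location. On kubeadm clusters, `kubeadm certs check-expiration` also lists every certificate at once.

```shell
# cert_expires_within_days FILE DAYS  (hypothetical helper)
# Exit 0 if the certificate in FILE expires within DAYS days,
# exit 1 if it is still valid after that, exit 2 if FILE is unreadable.
cert_expires_within_days() {
  local file=$1 days=$2
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 2
  fi
  # -checkend N exits 0 when the certificate is still valid N seconds from now
  if openssl x509 -checkend $(( days * 86400 )) -noout -in "$file" > /dev/null; then
    return 1
  fi
  return 0
}
```

For example, `cert_expires_within_days /etc/kubernetes/pki/apiserver.crt 30 && echo "renew soon"` prints a warning when fewer than 30 days remain.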

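Basic configuration:

1. Disable the firewall on every server.

2. Set up time synchronization. For example, push a cron job to every node with Ansible. ntpdate must be installed. Cron runs jobs with /bin/sh, so use > /dev/null 2>&1 instead of the bash-only &>:

```shell
ansible all -m shell -a 'echo "*/5 * * * * ntpdate time1.aliyun.com > /dev/null 2>&1 && hwclock -w" >> /var/spool/cron/crontabs/root'
```

3. Switch Ubuntu to a 24-hour clock: edit /etc/default/locale, add the line LC_TIME=en_DK.UTF-8, then reboot the server.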

4. Server resources and planning

| Service | Server IP address | Notes |
|---|---|---|
| Ansible (1) | 192.168.100.20 | Co-located on a K8s master node |
| k8s-master (3) | 192.168.100.20/21/22 | K8s master nodes; a VIP is required for HA |
| k8s-node (3) | 192.168.100.23/24/25 | K8s worker nodes |
| Etcd (3) | 192.168.100.20/21/22 | Co-located on the K8s master nodes |
| Haproxy (2) + Keepalived (2) | 192.168.100.26/27 | HA proxy service for the K8s masters and etcd |
| Harbor | 192.168.100.26 | HA image registry server |
| VIP (1) | 192.168.100.30/31/32 | Virtual IPs for HA (.30 fronts the api-server) |

II. Deploying the HA services keepalived + HAProxy

Deploying keepalived + HAProxy

Install keepalived and HAProxy on 192.168.100.26/27:

```shell
apt install keepalived haproxy -y
```

keepalived configuration on 26: /etc/keepalived/keepalived.conf

```
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 1
    priority 100
    advert_int 3
    authentication {
        auth_type PASS
        auth_pass admin
    }
    virtual_ipaddress {
        192.168.100.30 dev ens33 label ens33:1
        192.168.100.31 dev ens33 label ens33:2
        192.168.100.32 dev ens33 label ens33:3
    }
}
```

keepalived configuration on 27: /etc/keepalived/keepalived.conf

```
vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 1
    priority 50
    advert_int 3
    authentication {
        auth_type PASS
        auth_pass admin
    }
    virtual_ipaddress {
        192.168.100.30 dev ens33 label ens33:1
        192.168.100.31 dev ens33 label ens33:2
        192.168.100.32 dev ens33 label ens33:3
    }
}
```

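Restart keepalived on both machines and enable it at boot:

```shell
systemctl restart keepalived && systemctl enable keepalived
```

Confirm that the virtual IPs answer ping. Also confirm that they move to the backup keepalived machine (27) when keepalived on the master (26) goes down.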
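You can check whether a machine currently holds a VIP from the output of `ip -o -4 addr show`, which prints one address per line with ADDRESS/PREFIX in the fourth field. The helper below is a hypothetical sketch that reads that output on stdin and compares addresses exactly, so 192.168.100.3 does not match 192.168.100.30.

```shell
# vip_present IP  (hypothetical helper)
# Reads `ip -o -4 addr show` output on stdin; exit 0 if IP is assigned.
vip_present() {
  awk -v vip="$1" '
    { split($4, a, "/"); if (a[1] == vip) found = 1 }   # field 4 is ADDR/PREFIX
    END { exit(found ? 0 : 1) }'
}

# Failover check (run by hand):
#   on 26:  ip -o -4 addr show dev ens33 | vip_present 192.168.100.30 && echo "VIP on 26"
#   then stop keepalived on 26 and run the same check on 27
```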

HAProxy configuration on 26: /etc/haproxy/haproxy.cfg

```
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen k8s-api-6443
    bind 192.168.100.30:6443
    mode tcp
    server master1 192.168.100.20:6443 check inter 3s fall 3 rise 1
    server master2 192.168.100.21:6443 check inter 3s fall 3 rise 1
    server master3 192.168.100.22:6443 check inter 3s fall 3 rise 1
```

HAProxy configuration on 27: /etc/haproxy/haproxy.cfg

It is identical to the configuration on 26 above, so copy the file unchanged.

The HAProxy nodes also need a kernel setting. Without it, HAProxy cannot start on a machine that does not currently hold the virtual IP, because it cannot bind 192.168.100.30:6443:

```shell
echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf
sysctl -p
```

Restart HAProxy on both machines and enable it at boot:

```shell
systemctl restart haproxy && systemctl enable haproxy
```
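You can confirm that HAProxy sees all three api-servers as healthy through the stats socket configured above (/run/haproxy/admin.sock). Its `show stat` command returns CSV. The sketch below assumes socat is installed (apt install socat), and the function name is hypothetical. It finds the status column by name in the CSV header rather than by a fixed position.

```shell
# haproxy_server_status PROXY  (hypothetical helper)
# Reads "show stat" CSV on stdin and prints "SERVER STATUS" for each server
# of PROXY, skipping the FRONTEND and BACKEND summary rows.
haproxy_server_status() {
  awk -F, -v px="$1" '
    NR == 1 {
      sub(/^# /, "")                          # header starts with "# pxname,..."
      for (i = 1; i <= NF; i++) col[$i] = i   # column name -> position
      next
    }
    $1 == px && $2 != "FRONTEND" && $2 != "BACKEND" { print $2, $(col["status"]) }'
}

# Example:
#   echo "show stat" | socat stdio /run/haproxy/admin.sock | haproxy_server_status k8s-api-6443
```

Every master should be reported as UP once its kube-apiserver is running.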

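III. Deploying the Harbor service

Deploy Harbor on 192.168.100.26/27. Docker and docker-compose must be installed first. On Ubuntu the Docker engine package is docker.io:

```shell
apt install -y docker.io docker-compose
```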

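Download the offline installer for the version you need from GitHub: https://github.com/goharbor/harbor

For example, for version 2.4.2:

```shell
# 1. Download (through the ghproxy.com GitHub proxy)
cd /app && wget https://ghproxy.com/https://github.com/goharbor/harbor/releases/download/v2.4.2/harbor-offline-installer-v2.4.2.tgz
# 2. Unpack
tar -zxvf harbor-offline-installer-v2.4.2.tgz
# 3. Create harbor.yml from the template
cd harbor && cp harbor.yml.tmpl harbor.yml
# 4. Create the certificate directory
mkdir certs
cd certs
# Generate the CA private key
openssl genrsa -out ./harbor-ca.key
# Self-sign the certificate
openssl req -x509 -new -nodes -key ./harbor-ca.key -subj "/CN=harbor.haoge.com" -days 7120 -out ./harbor-ca.crt
```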

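This creates the key and certificate in the certs directory:

- harbor-ca.crt
- harbor-ca.key

Newer Go-based clients cannot log in with a plain x509 certificate like this one. Since Go 1.15 the host name must appear in the subjectAltName (SAN) extension, not only in the Common Name. Generate a certificate with SAN enabled instead; see this blog post:

https://www.cnblogs.com/Principles/p/CloudComputing007.html

Alternatively, follow the official Harbor guide to create the login certificate:

https://goharbor.io/docs/2.6.0/install-config/configure-https/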
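A minimal sketch of generating a self-signed certificate that carries a SAN. It assumes OpenSSL 1.1.1 or later, which provides `-addext`. It produces a single self-signed certificate that serves directly as Harbor's server certificate. That is simpler than the CA-plus-server-certificate procedure in the Harbor guide. The function name is hypothetical, and the output file names match the harbor.yml paths used below.

```shell
# gen_harbor_cert DIR DOMAIN DAYS  (hypothetical helper)
# Writes DIR/harbor-ca.key and DIR/harbor-ca.crt: a self-signed certificate
# whose subjectAltName contains DOMAIN, so Go-based clients accept it.
gen_harbor_cert() {
  local dir=$1 domain=$2 days=$3
  mkdir -p "$dir" || return 1
  openssl req -x509 -newkey rsa:4096 -sha256 -nodes \
    -keyout "$dir/harbor-ca.key" -out "$dir/harbor-ca.crt" \
    -days "$days" -subj "/CN=$domain" \
    -addext "subjectAltName=DNS:$domain"
}

# Example:
#   gen_harbor_cert /app/harbor/certs harbor.haoge.com 7120
#   openssl x509 -in /app/harbor/certs/harbor-ca.crt -noout -text | grep -A1 "Subject Alternative Name"
```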

Edit harbor.yml as follows:

```yaml
hostname: harbor.haoge.com

http:
  port: 80

https:
  port: 443
  certificate: /app/harbor/certs/harbor-ca.crt
  private_key: /app/harbor/certs/harbor-ca.key

harbor_admin_password: Harbor12345

database:
  password: root123
  max_idle_conns: 100
  max_open_conns: 900

data_volume: /data

trivy:
  ignore_unfixed: false
  skip_update: false
  offline_scan: false
  insecure: false

jobservice:
  max_job_workers: 10

notification:
  webhook_job_max_retry: 10

chart:
  absolute_url: disabled

log:
  level: info
  local:
    rotate_count: 50
    rotate_size: 200M
    location: /var/log/harbor

_version: 2.4.0

proxy:
  http_proxy:
  https_proxy:
  no_proxy:
  components:
    - core
    - jobservice
    - trivy
```

Install:

```shell
cd /app/harbor && ./install.sh --with-trivy --with-chartmuseum
```

After a successful installation, open https://harbor.haoge.com in a browser. The host name must resolve to the Harbor server, for example through /etc/hosts.
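Docker hosts that log in to or pull from this registry must trust its self-signed certificate. Docker reads extra CA certificates from /etc/docker/certs.d/REGISTRY_HOST/ca.crt. The sketch below copies the certificate there. The function name and its optional ROOT argument (present only so it can be tried safely) are hypothetical. Run it on every node that talks to Harbor, then test with `docker login harbor.haoge.com -u admin`.

```shell
# trust_registry_cert CERT REGISTRY_HOST [ROOT]  (hypothetical helper)
# Installs CERT as the CA certificate Docker uses for REGISTRY_HOST.
# ROOT defaults to / and only exists for dry runs in a scratch directory.
trust_registry_cert() {
  local cert=$1 host=$2 root=${3:-/}
  local dir="${root%/}/etc/docker/certs.d/$host"
  mkdir -p "$dir" && cp "$cert" "$dir/ca.crt"
}

# Example:
#   trust_registry_cert /app/harbor/certs/harbor-ca.crt harbor.haoge.com
```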

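To let pods in k8s log in to Harbor and pull its images, create a secret, for example:

```shell
kubectl create secret docker-registry registry-secret --namespace=event --docker-server=harbor.haoge.com --docker-username=admin --docker-password=Harbor12345
```

The --docker-server value must match the registry host in the image names (harbor.haoge.com in the example below). The kubelet selects credentials by registry host, so a secret created for 192.168.100.26 is not used for harbor.haoge.com/... images.

Next, reference the secret under imagePullSecrets in the Deployment YAML. Create the Deployment in the same namespace as the secret (event here, e.g. kubectl apply -n event -f FILE). Images from the private registry can then be pulled normally: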

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: test
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: test
        tier: test
        track: stable
    spec:
      containers:
        - image: harbor.haoge.com/base/filebeat:7.10.2
          imagePullPolicy: Always
          name: filebeat
          volumeMounts:
            - mountPath: /data/logs
              name: app-logs
            - mountPath: /usr/share/filebeat/filebeat.yml
              name: filebeat-config
              subPath: filebeat.yml
        - env:
            - name: PARAMS
              value: java -jar /opt/app.jar > /data/logs/myjavaserver.log
            - name: JAVA_OPTS
              value: -Xmx1024m -Xms1024m
          image: harbor.haoge.com/base/javaserver:v1
          imagePullPolicy: Always
          livenessProbe:
            failureThreshold: 10
            httpGet:
              path: /actuator/health/liveness
              port: http
              scheme: HTTP
            initialDelaySeconds: 500
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          name: test
          ports:
            - containerPort: 3609
              name: http
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /actuator/health/readiness
              port: http
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          resources:
            limits:
              cpu: 500m
              memory: 1Gi
            requests:
              cpu: 500m
              memory: 1Gi
          volumeMounts:
            - mountPath: /data/logs
              name: app-logs
      imagePullSecrets:            # the key setting
        - name: registry-secret    # the key setting
      restartPolicy: Always
      volumes:
        - emptyDir: {}
          name: app-logs
        - configMap:
            items:
              - key: filebeat.yml
                path: filebeat.yml
            name: filebeat-config
          name: filebeat-config
```

IV. k8s cluster deployment

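1. Install Ansible on 192.168.100.20 and set up password-less SSH to the other nodes

```shell
apt install ansible
ssh-keygen          # press Enter at every prompt
ssh-copy-id IP      # replace IP with each server's actual address
```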
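Instead of running ssh-copy-id by hand for each address, you can loop over the servers from the planning table. This is a sketch that assumes the root account is used on every node. The function name and the DRY_RUN switch, which only prints the commands, are hypothetical.

```shell
# push_ssh_keys IP...  (hypothetical helper)
# Copies the local public key to root@IP for every IP given.
# With DRY_RUN=1 the commands are printed instead of executed.
push_ssh_keys() {
  local ip
  for ip in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh-copy-id root@$ip"
    else
      ssh-copy-id "root@$ip" || return 1   # stop at the first failure
    fi
  done
}
```

In bash, `push_ssh_keys 192.168.100.{20..27}` covers every server in the table.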

Every node managed by Ansible needs a python symlink pointing to python3. Ansible itself can create it on all nodes at once:

vim /etc/ansible/hosts

```ini
[all]
192.168.100.20
192.168.100.21
192.168.100.22
192.168.100.23
192.168.100.24
192.168.100.25
192.168.100.26
192.168.100.27
```

Then run:

```shell
ansible all -m shell -a 'ln -s /usr/bin/python3 /usr/bin/python'
```

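```shell
cd /app
# Download the release source
wget https://github.com/easzlab/kubeasz/archive/refs/tags/3.2.0.tar.gz
# Unpack
tar -zxvf 3.2.0.tar.gz && cd kubeasz-3.2.0
```

Or download ezdown directly:

```shell
wget https://github.com/easzlab/kubeasz/releases/download/3.2.0/ezdown
chmod +x ./ezdown
./ezdown --help     # show the help text
vim ezdown          # edit the versions of the components to install
./ezdown -D         # download the images and binaries
```

When the download finishes, all files are under /etc/kubeasz:

```shell
cd /etc/kubeasz
```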

Create the cluster from the command line:

```shell
./ezctl new k8s-cluster1
```

This generates two files. Edit them to match your environment:

- /etc/kubeasz/clusters/k8s-cluster1/hosts
- /etc/kubeasz/clusters/k8s-cluster1/config.yml
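The hosts file requires SERVICE_CIDR and CLUSTER_CIDR not to overlap the node (host) network. A small check using 64-bit shell arithmetic can confirm this before deployment. The function names are hypothetical. The node network 192.168.100.0/24 in the example is an assumption based on the addresses in the planning table.

```shell
# ip_to_int A.B.C.D -> print the address as a 32-bit integer
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidrs_overlap NET1/LEN NET2/LEN -> exit 0 if the two IPv4 ranges overlap
cidrs_overlap() {
  local a b a_len=${1#*/} b_len=${2#*/} a_start a_end b_start b_end
  a=$(ip_to_int "${1%/*}")
  b=$(ip_to_int "${2%/*}")
  # Clear the host bits to get each network's first address
  a_start=$(( a & ((0xFFFFFFFF << (32 - a_len)) & 0xFFFFFFFF) ))
  b_start=$(( b & ((0xFFFFFFFF << (32 - b_len)) & 0xFFFFFFFF) ))
  a_end=$(( a_start + (1 << (32 - a_len)) - 1 ))
  b_end=$(( b_start + (1 << (32 - b_len)) - 1 ))
  # Two ranges overlap when each one starts before the other ends
  [ "$a_start" -le "$b_end" ] && [ "$b_start" -le "$a_end" ]
}

# Example:
#   for net in 10.100.0.0/16 10.200.0.0/16; do
#     cidrs_overlap "$net" 192.168.100.0/24 && echo "$net overlaps the node network"
#   done
```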

vim /etc/kubeasz/clusters/k8s-cluster1/hosts

```ini
[etcd]
192.168.100.20
192.168.100.21
192.168.100.22

# master node(s)
[kube_master]
192.168.100.20
192.168.100.21

# work node(s)
[kube_node]
192.168.100.23
192.168.100.24

# [optional] harbor server, a private docker registry
# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one
[harbor]
#192.168.100.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside
[ex_lb]
192.168.100.26 LB_ROLE=master EX_APISERVER_VIP=192.168.100.30 EX_APISERVER_PORT=6443
192.168.100.27 LB_ROLE=backup EX_APISERVER_VIP=192.168.100.30 EX_APISERVER_PORT=6443

# [optional] ntp server for the cluster
[chrony]
#192.168.100.1

[all:vars]
# --------- Main Variables ---------------
# Secure port for apiservers
SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd
CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking
SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking
CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range
NODE_PORT_RANGE="30000-32767"

# Cluster DNS Domain
CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---
# Binaries Directory
bin_dir="/usr/local/bin"

# Deploy Directory (kubeasz workspace)
base_dir="/etc/kubeasz"

# Directory for a specific cluster
cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"

# CA and other components cert/key Directory
ca_dir="/etc/kubernetes/ssl"
```

vim /etc/kubeasz/clusters/k8s-cluster1/config.yml

```yaml
INSTALL_SOURCE: "online"
OS_HARDEN: false
ntp_servers:
  - "ntp1.aliyun.com"
  - "time1.cloud.tencent.com"
  - "0.cn.pool.ntp.org"
local_network: "0.0.0.0/0"
CA_EXPIRY: "876000h"
CERT_EXPIRY: "438000h"
CLUSTER_NAME: "cluster1"
CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"
K8S_VER: "1.23.1"
ETCD_DATA_DIR: "/var/lib/etcd"
ETCD_WAL_DIR: ""
ENABLE_MIRROR_REGISTRY: true
SANDBOX_IMAGE: "easzlab/pause:3.6"
CONTAINERD_STORAGE_DIR: "/var/lib/containerd"
DOCKER_STORAGE_DIR: "/var/lib/docker"
ENABLE_REMOTE_API: false
INSECURE_REG: '["127.0.0.1/8"]'
MASTER_CERT_HOSTS:
  - "192.168.100.20"
  - "192.168.100.21"
  - "192.168.100.22"
  - "192.168.100.30"
  #- "www.test.com"
NODE_CIDR_LEN: 24
KUBELET_ROOT_DIR: "/var/lib/kubelet"
MAX_PODS: 110
KUBE_RESERVED_ENABLED: "no"
SYS_RESERVED_ENABLED: "no"
BALANCE_ALG: "roundrobin"
FLANNEL_BACKEND: "vxlan"
DIRECT_ROUTING: false
flannelVer: "v0.15.1"
flanneld_image: "easzlab/flannel:{{ flannelVer }}"
flannel_offline: "flannel_{{ flannelVer }}.tar"
CALICO_IPV4POOL_IPIP: "Always"
IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"
CALICO_NETWORKING_BACKEND: "brid"
calico_ver: "v3.23.5"
calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"
calico_offline: "calico_{{ calico_ver }}.tar"
ETCD_CLUSTER_SIZE: 1
cilium_ver: "v1.4.1"
cilium_offline: "cilium_{{ cilium_ver }}.tar"
OVN_DB_NODE: "{{ groups['kube_master'][0] }}"
kube_ovn_ver: "v1.5.3"
kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"
OVERLAY_TYPE: "full"
FIREWALL_ENABLE: "true"
kube_router_ver: "v0.3.1"
busybox_ver: "1.28.4"
kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"
busybox_offline: "busybox_{{ busybox_ver }}.tar"
dns_install: "no"
corednsVer: "1.8.6"
ENABLE_LOCAL_DNS_CACHE: false
dnsNodeCacheVer: "1.21.1"
LOCAL_DNS_CACHE: "169.254.20.10"
metricsserver_install: "no"
metricsVer: "v0.5.2"
dashboard_install: "no"
dashboardVer: "v2.4.0"
dashboardMetricsScraperVer: "v1.0.7"
ingress_install: "no"
ingress_backend: "traefik"
traefik_chart_ver: "10.3.0"
prom_install: "no"
prom_namespace: "monitor"
prom_chart_ver: "12.10.6"
nfs_provisioner_install: "no"
nfs_provisioner_namespace: "kube-system"
nfs_provisioner_ver: "v4.0.2"
nfs_storage_class: "managed-nfs-storage"
nfs_server: "192.168.1.10"
nfs_path: "/data/nfs"
HARBOR_VER: "v2.1.3"
HARBOR_DOMAIN: "harbor.yourdomain.com"
HARBOR_TLS_PORT: 8443
HARBOR_SELF_SIGNED_CERT: true
HARBOR_WITH_NOTARY: false
HARBOR_WITH_TRIVY: false
HARBOR_WITH_CLAIR: false
HARBOR_WITH_CHARTMUSEUM: true
```

Edit the file:

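vim /etc/kubeasz/playbooks/01.prepare.yml

Comment out two lines:

```yaml
- hosts:
  - kube_master
  - kube_node
  - etcd
  # - ex_lb    # commented out: kubeasz's load balancer is not used; the HAProxy + keepalived pair deployed earlier is used instead
  # - chrony   # comment this out too if you already set up time synchronization yourself
  roles:
  - { role: os-harden, when: "OS_HARDEN|bool" }
  - { role: chrony, when: "groups['chrony']|length > 0" }

- hosts: localhost
  roles:
  - deploy

- hosts:
  - kube_master
  - kube_node
  - etcd
  roles:
  - prepare
```

Deploy step by step: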

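```shell
cd /etc/kubeasz
./ezctl setup k8s-cluster1 01   # prepare the base environment
./ezctl setup k8s-cluster1 02   # deploy etcd
./ezctl setup k8s-cluster1 03   # install the container runtime
./ezctl setup k8s-cluster1 04   # install the master nodes
```

If step 04 fails when deploying a newer version, the cause is a containerd that was already installed on the system. Comment out the disabled_plugins line in its configuration, restart containerd, and run step 04 again:

```shell
vim /etc/containerd/config.toml    # comment out this line: #disabled_plugins = ["cri"]
systemctl restart containerd
./ezctl setup k8s-cluster1 04
```

Install the worker nodes: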

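```shell
./ezctl setup k8s-cluster1 05
```

After a worker node is deployed, a kube-lb proxy to the api-servers is set up on it. Its configuration is in /etc/kube-lb/conf.

Install the network plugin:

```shell
./ezctl setup k8s-cluster1 06
```

Run a test pod to check that the cluster works:

```shell
kubectl run net-test1 --image=centos:7.9.2009 -- sleep 3600
```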

我这里运行的Calico的Pod的报错:

error=exit status 1 family="inet" stderr="ipset v7.1: Kernel and userspace incompatible: settype ha

原因: ubuntu2204版本运行calico的v3.19.3版本会有问题

解决方案: 把calico更新成了3.23.5的版本即可,我这里是又下载了个最新版的ezdown,找把里面calico版本改了,然后找到/etc/kubeasz/roles/calico/templates/calico-v3.23.yaml.j2 替换到了当前版本的 里面镜像地址改为了当前下载的镜像地址

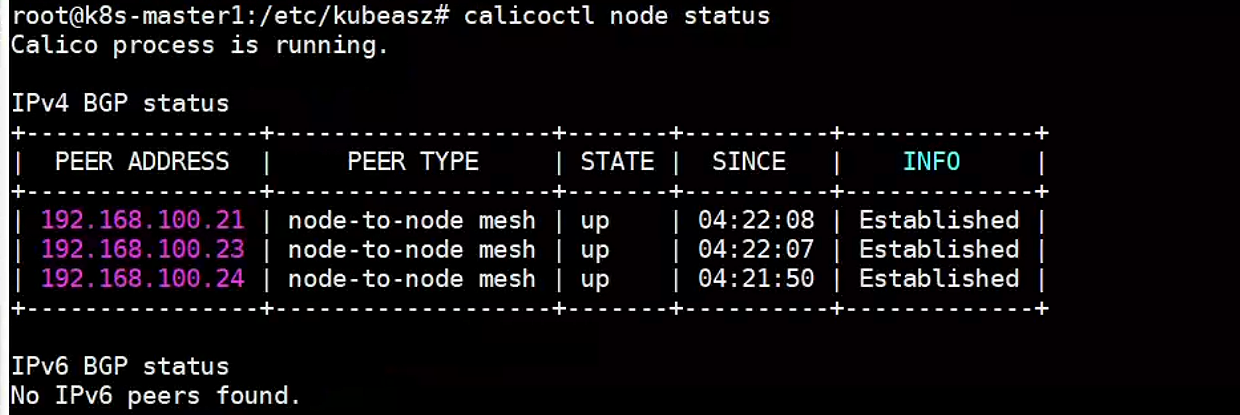

启动有问题的话是因为镜像的问题,可以到/etc/kubeasz/cluster/k8s-cluster1/yml目录下 修改calico.yml文件,kubect apply -fcalicoctl node status

V. Deploying the CoreDNS name-resolution service

GitHub: https://github.com/coredns/coredns

To get the CoreDNS YAML files, search for kubernetes on GitHub, open the CHANGELOG, and go to the "Downloads for" section of the version you need:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.23.md#downloads-for-v1235

Download this source package:

```shell
wget https://dl.k8s.io/v1.23.5/kubernetes.tar.gz
tar -zxvf kubernetes.tar.gz && cd kubernetes/cluster/addons/dns/coredns
```

This directory contains the CoreDNS template files. Copy one and edit it:

```shell
cp coredns.yaml.base /root/coredns.yaml
```

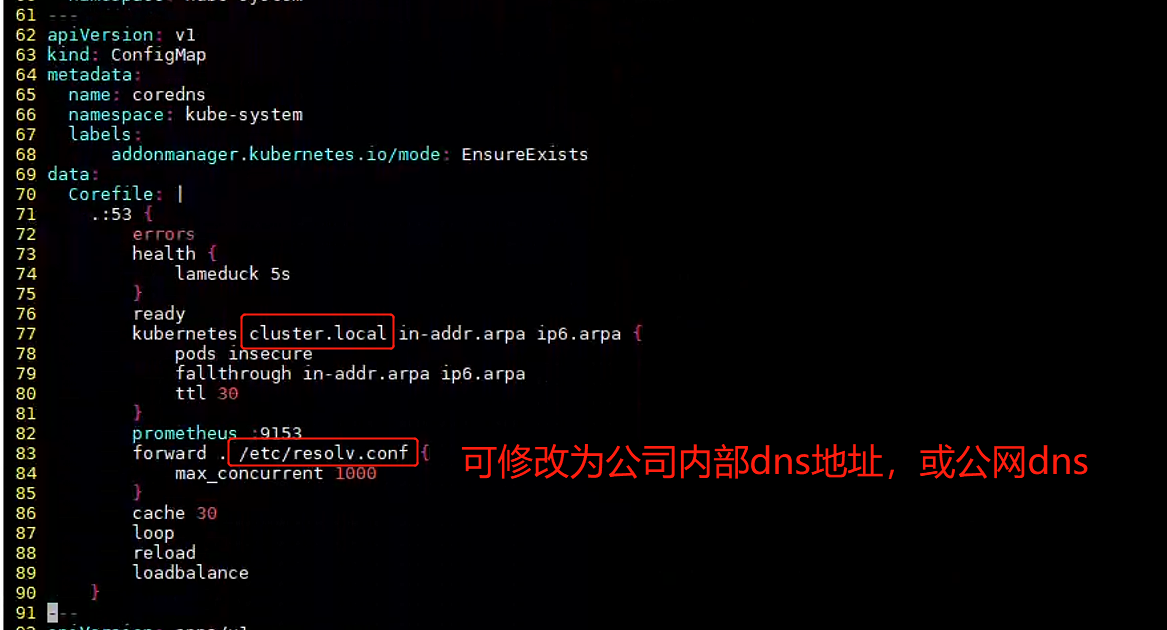

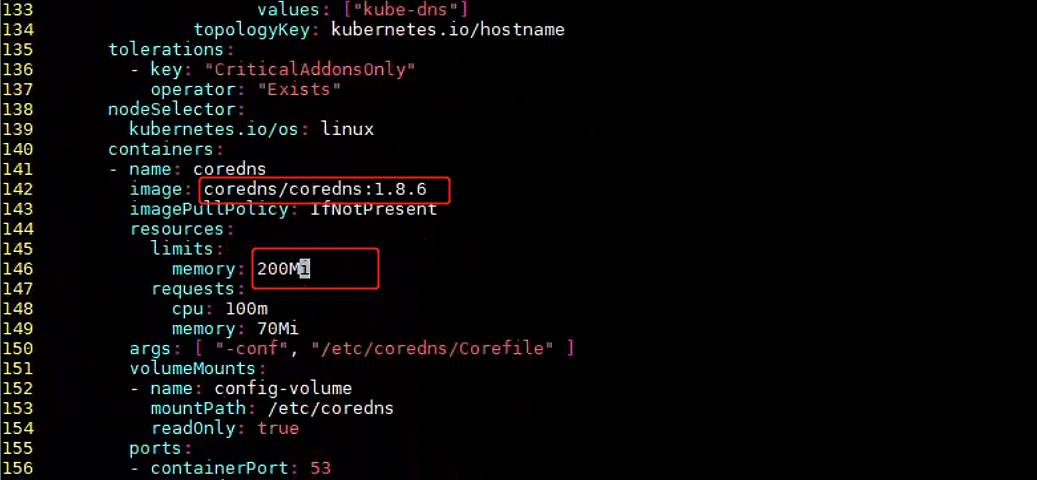

vim /root/coredns.yaml

修改项

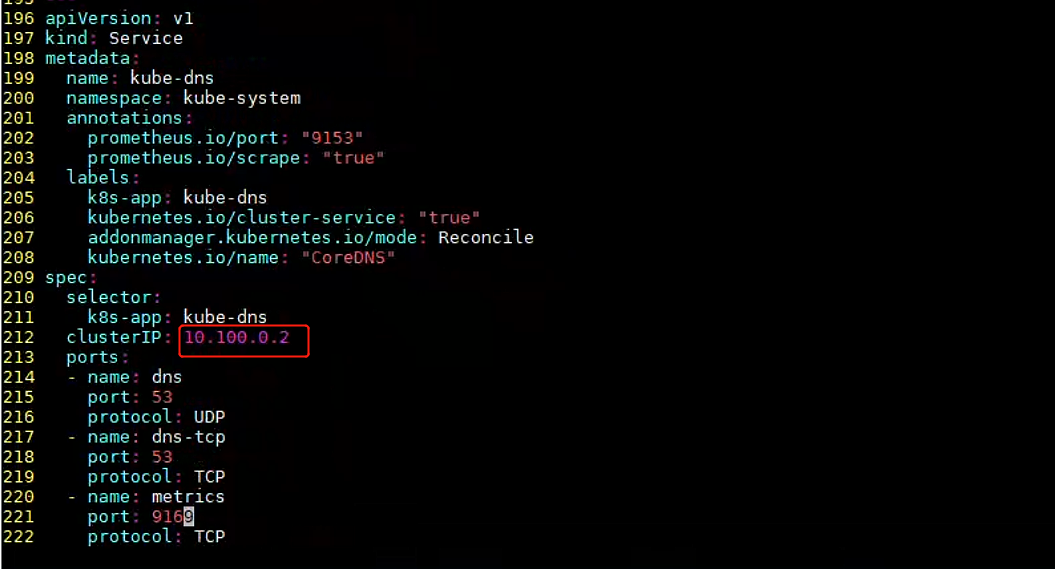

修改内存资源限制、镜像地址改为国内可访问的,

修改clusterIP地址,获取方式可以先创建一个临时pod,进入pod容器内,cat /etc/resolv.conf
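You can also script these edits with sed. In the v1.23 template the values to fill in are placeholders such as `__DNS__DOMAIN__`, `__DNS__SERVER__` and `__DNS__MEMORY__LIMIT__`. Treat those names as an assumption and check them against your copy. The image address still has to be changed by hand. Both function names below are hypothetical.

```shell
# first_nameserver: print the first "nameserver" entry of a resolv.conf on stdin
first_nameserver() {
  awk '$1 == "nameserver" { print $2; exit }'
}

# render_coredns TEMPLATE OUTPUT DOMAIN DNS_IP MEMORY_LIMIT  (hypothetical)
# Replaces the placeholders of coredns.yaml.base and writes OUTPUT.
render_coredns() {
  sed -e "s/__DNS__DOMAIN__/$3/g" \
      -e "s/__DNS__SERVER__/$4/g" \
      -e "s/__DNS__MEMORY__LIMIT__/$5/g" \
      "$1" > "$2"
}

# Example (net-test1 is the test pod created earlier):
#   dns_ip=$(kubectl exec net-test1 -- cat /etc/resolv.conf | first_nameserver)
#   render_coredns coredns.yaml.base /root/coredns.yaml cluster.local "$dns_ip" 256Mi
```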

执行命令部署coredns

kubectl apply -f coredns.yaml

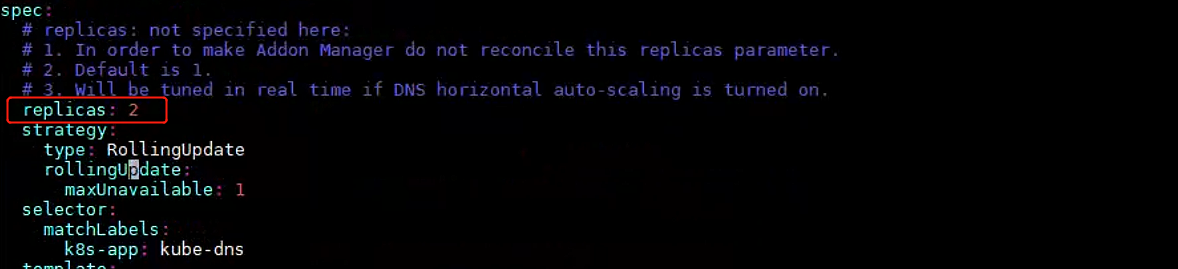

为了实现coreDNS的高可用,可以开启多副本,然后再次执行:kubectl apply -f coredns.yaml即可
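To confirm that name resolution works after CoreDNS is up, resolve the kubernetes Service name from inside the test pod. This sketch uses getent, which ships with glibc and should therefore be present in the centos:7.9.2009 image used for net-test1. The function name is hypothetical. The domain defaults to cluster.local, the CLUSTER_DNS_DOMAIN from the hosts file. The address printed should be the kubernetes Service IP, normally the first address of SERVICE_CIDR (10.100.0.1 here).

```shell
# dns_check POD [DOMAIN]  (hypothetical helper)
# Resolves kubernetes.default.svc.DOMAIN inside POD and prints the address.
dns_check() {
  local pod=$1 domain=${2:-cluster.local} out
  out=$(kubectl exec "$pod" -- getent hosts "kubernetes.default.svc.$domain") || return 1
  # getent prints "ADDRESS  NAME"; keep only the address
  printf '%s\n' "${out%% *}"
}

# Example:
#   dns_check net-test1
```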

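VI. Deploying the official dashboard

Search for dashboard on GitHub:

https://github.com/kubernetes/dashboard/releases

Pick the version you need and download the corresponding YAML file:

```shell
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml
```

If wget fails, this blog post describes a fix:

https://blog.csdn.net/m0_52650517/article/details/119831630

```shell
mv recommended.yaml dashboard-2.5.1.yaml
kubectl apply -f dashboard-2.5.1.yaml   # deploy
```

To reach it from outside the cluster, change the Service in the YAML file to NodePort: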

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort        # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000   # added
  selector:
    k8s-app: kubernetes-dashboard
```

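After re-applying the file, open https://NODE_IP:30000 in a browser. HTTPS is required, and logging in needs a token.

To get a token, create the following ServiceAccount and ClusterRoleBinding. Then log in with the token from the account's secret:

vim admin-user.yaml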

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
```
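After `kubectl apply -f admin-user.yaml`, read the token as follows. On Kubernetes 1.23 (the K8S_VER used here), a token Secret is still created automatically for each ServiceAccount. From kubectl 1.24 on, `kubectl create token` issues a token through the TokenRequest API instead. The sketch tries `create token` first and falls back to the Secret. The function name is hypothetical.

```shell
# dashboard_token [NAMESPACE] [SERVICEACCOUNT]  (hypothetical helper)
# Prints a login token for the dashboard ServiceAccount.
dashboard_token() {
  local ns=${1:-kubernetes-dashboard} sa=${2:-admin-user} secret
  # kubectl >= 1.24: request a token through the TokenRequest API
  if kubectl -n "$ns" create token "$sa" 2>/dev/null; then
    return 0
  fi
  # Older clusters: read the auto-created token Secret of the ServiceAccount
  secret=$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  [ -n "$secret" ] || return 1
  kubectl -n "$ns" get secret "$secret" -o go-template='{{.data.token | base64decode}}'
}
```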

I rarely use the dashboard, so I won't cover it further. I recommend the Chinese-developed visualization tool Kuboard instead.

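VII. Upgrading the k8s version

An upgrade replaces the binaries on each node. While a master node is being upgraded, remove it from the kube-lb configuration on the worker nodes (under /etc/kube-lb/conf) so that no requests are sent to it.

Upgrading a master node

1. Stop the services: systemctl stop kube-apiserver.service kube-controller-manager.service kube-scheduler.service kube-proxy.service kubelet.service
2. Replace the binaries with the new versions of the Kubernetes components downloaded from GitHub.
3. Start the services: systemctl start kube-apiserver.service kube-controller-manager.service kube-scheduler.service kube-proxy.service kubelet.service
4. Add the master node back to the kube-lb configuration on the worker nodes and restart kube-lb.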

Upgrading a worker node

1. Drain the pods from the node being upgraded: kubectl drain 192.168.100.23 --force --ignore-daemonsets --delete-emptydir-data
2. Stop kubelet and kube-proxy: systemctl stop kubelet.service kube-proxy.service
3. Replace the kubelet and kube-proxy binaries with the new versions.
4. Start kubelet and kube-proxy: systemctl start kubelet.service kube-proxy.service
5. Make the drained node schedulable again: kubectl uncordon 192.168.100.23
6. Upgrade the remaining nodes by repeating the steps from step 1.
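You can script the worker-node steps above from the Ansible/kubeasz host. This sketch assumes:

- password-less SSH as root;
- node names equal to their IPs, as in the kubectl drain example;
- the new kubelet and kube-proxy binaries in a local directory;
- the kubeasz bin_dir /usr/local/bin.

The function name is hypothetical. It stops at the first failing step so that a half-upgraded node is not uncordoned.

```shell
# upgrade_node NODE_IP NEW_BIN_DIR  (hypothetical helper)
# Drains the node, swaps in new kubelet/kube-proxy binaries, then uncordons it.
upgrade_node() {
  local node=$1 src=$2 bin_dir=/usr/local/bin
  kubectl drain "$node" --force --ignore-daemonsets --delete-emptydir-data || return 1
  ssh "root@$node" systemctl stop kubelet.service kube-proxy.service || return 1
  scp "$src/kubelet" "$src/kube-proxy" "root@$node:$bin_dir/" || return 1
  ssh "root@$node" systemctl start kubelet.service kube-proxy.service || return 1
  kubectl uncordon "$node"
}

# Example: one node at a time, stopping on the first failure
#   for n in 192.168.100.23 192.168.100.24; do upgrade_node "$n" /root/k8s-new/bin || break; done
```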

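VIII. Scaling out the cluster

Adding a master node

Prepare the server in advance: password-less SSH, time synchronization, firewall and SELinux disabled, and so on.

```shell
./ezctl add-master k8s-cluster1 192.168.100.22
```

Adding a worker node

Prepare password-less SSH, time synchronization, and so on in advance.

```shell
./ezctl add-node k8s-cluster1 192.168.100.25
```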

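IX. Finding the host interface that corresponds to a container's eth0 (useful for capturing packets on the right interface when troubleshooting)

1. Inside the container, install ethtool and net-tools.
2. Inside the container, run: ethtool -S eth0
3. On the host, run ip link show. The interface whose leading index matches the value from step 2 is the other end of the container's eth0.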
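You can combine steps 2 and 3. The veth driver reports the host-side interface index as peer_ifindex in `ethtool -S` output. `ip -o link show` prints one interface per line, starting with its index. The two helpers below are hypothetical sketches that read those outputs on stdin.

```shell
# peer_ifindex: read `ethtool -S eth0` output on stdin, print the peer index
peer_ifindex() {
  awk -F': *' '$1 ~ /peer_ifindex$/ { print $2; exit }'
}

# host_ifname INDEX: read `ip -o link show` output on stdin and print the
# name of the interface with that index (the "@ifN" suffix is dropped)
host_ifname() {
  awk -F': ' -v idx="$1" '$1 == idx { sub(/@.*/, "", $2); print $2; exit }'
}

# Example, on the host running the pod (POD is a placeholder):
#   idx=$(kubectl exec POD -- ethtool -S eth0 | peer_ifindex)
#   tcpdump -i "$(ip -o link show | host_ifname "$idx")"
```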

Check the kube-proxy mode of the cluster (run on a node):

```shell
curl 127.0.0.1:10249/proxyMode
```

Last edited by 于浩, updated 2025-12-05 17:25